Product Safety Reforms in the UK and Europe – Artificial Intelligence

Market insight, 31 October 2023

UK and Europe

Most UK product liability and safety law is derived from EU law. Since the end of the UK-EU transition period on 31 December 2020, the legal basis on which the EU-derived law applies in the UK has changed, but for the most part the legislation itself remains in force as retained EU law.

However, 2022 and 2023 have seen the beginning of significant changes in the product liability and safety landscape across the UK and Europe. EU and UK governments and regulators are seeking to modernise laws and regulations that have been in place for over 35 years, and to introduce new legislation that significantly bolsters regulation of the development and use of software and artificial intelligence.

As part of its digital strategy, the EU wants to regulate AI, amongst other things, to ensure better conditions for the development of innovative technology.

With this in mind, the EU has proposed the implementation of a three-part model of legislation to address AI via:

a. The AI Act – the focus of which is on the safety of and the prevention of harm by Artificial Intelligence;

b. The proposed AI Liability Directive – a different (but overlapping) route of redress for harm caused by AI; and

c. The proposed amendments to the Product Liability Directive – a route of redress for harm caused by AI and defective products, as discussed in our article 'Product Liability Reforms in the UK and Europe – a changing landscape'.

According to the EU, these proposals will 'adapt liability rules to the digital age', particularly in relation to AI. All three legislative pieces (the AI Act, the Product Liability Directive and the AI Liability Directive) are designed to fit together to provide different but overlapping routes of redress for consumers when harm is caused by AI systems. This is of particular importance to manufacturers using AI in their products.

In this article, we discuss the use of AI in product manufacturing and the significant legislative change to product liability legislation that is taking place in the EU as a result of the proposed Artificial Intelligence Act and the Artificial Intelligence Liability Directive.

AI and its impact on Businesses

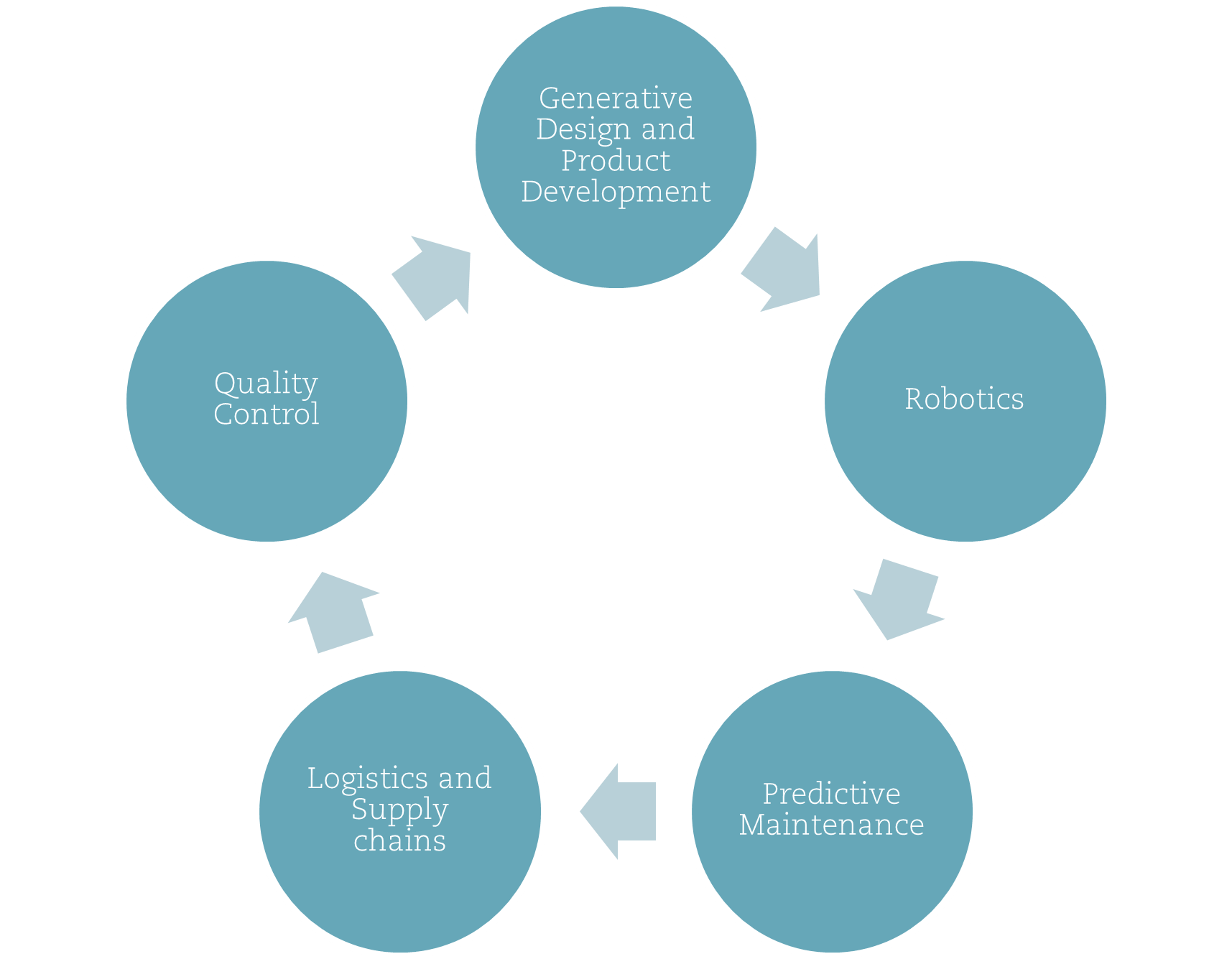

AI is rapidly transforming the manufacturing industry and is becoming increasingly essential to the day-to-day operations of large-scale manufacturers worldwide, in a variety of forms. Examples include:

Generative Design and Product Development: AI is being used to mimic the design process used by engineers so that manufacturers can produce numerous design options for one product and then use AR (augmented reality) and VR (virtual reality) to test many models of a product before beginning production.

Robotics: Whilst robots have been used to automate manufacturing in factories for decades, AI is now being used to augment those robots so that they can work alongside employees, streamlining manufacturing processes and carrying out tasks such as assembling and packaging products while maintaining the required high standards of hygiene.

Predictive Maintenance: Manufacturers are using AI to understand how, why and when failures and breakdowns may occur within their manufacturing machinery, so that they can carry out repairs with appropriate resources before a breakdown or ensure a quick fix during the manufacturing process.

Logistics and Supply Chains: With AI, factories can better manage their entire supply chains, from capacity forecasting to stocktaking. By establishing a real-time and predictive model for assessing and monitoring suppliers, businesses can be alerted the minute an issue occurs in the supply chain and can instantly evaluate the severity of the disruption, whilst also ensuring that they are neither under- nor overstocked, thereby decreasing waste.

Quality Control: Manufacturers are using AI-based machine vision systems to spot deviations from the norm that cannot be identified by the human eye, improving quality control and enhancing shop floor performance in the production cycle.

As a result of the numerous ways in which AI is being used throughout industry, the EU is working on a new legal framework that aims to bolster legislation on the development and use of AI with a focus on data quality, transparency and accountability.

The Proposed EU Artificial Intelligence Regime

The AI Act – A summary

The AI Act was first proposed by the European Commission on 21 April 2021. On 14 June 2023, the European Parliament adopted its amendments to the draft AI Act, and negotiations with the Council of the European Union commenced to agree the final form of the legislation. The amended draft serves as the Parliament’s negotiating position with member states on the final text. This negotiation process will take time and, even if the AI Act is adopted quickly, it will apply in 2025 at the earliest.

The proposed AI Act focuses primarily on strengthening rules around data quality, transparency, human oversight and accountability. If adopted, the AI Act will impose differing obligations and conformity requirements on developers, deployers and providers of AI, depending on the level of risk the AI poses. These risks are currently categorised as:

1. Unacceptable Risk AI – AI systems that are considered a threat to people and which will be prohibited, for example AI that is used to classify people based on their social behaviour or personal characteristics. This list was expanded in the June 2023 amendments to include:

- Facial recognition and any other form of real-time remote biometric identification systems in publicly accessible spaces;

- Predictive policing systems;

- Biometric categorisation systems that use sensitive characteristics of natural persons;

- Emotion recognition systems used in law enforcement, border management, workplace, and educational institutions;

- The creation of facial recognition databases on the basis of indiscriminate scraping of biometric data from social media or CCTV footage; and

- AI systems aimed at influencing voters.

2. High-risk AI – AI systems that pose a significant risk of harm to people’s health, safety, fundamental rights or the environment will be considered high risk and will be divided into two categories: (1) AI systems used in products falling under the EU’s product safety legislation, which covers toys, aviation, cars, autonomous vehicles, medical devices and lifts; and (2) AI systems falling into eight specific areas, which will have to be registered in an EU database. All high-risk AI systems will need to be assessed before being put on the market and will also be required to adhere to rigorous testing, proper documentation of data quality and an accountability framework throughout their lifecycle.

3. Limited Risk AI – This AI should comply with minimal transparency requirements that allow users to know they are engaging with AI and to make informed decisions. After interacting with the AI, the user can then decide whether they want to continue using it.

Whilst the AI Act is primarily aimed at the development, deployment and use of AI in the EU, any provider placing products with AI on the market or putting AI systems into service in the EU will be in scope. In addition, any providers and deployers located in third countries will also be in scope where the output of their systems is intended for use in the EU.

All organisations with products containing AI will need to consider which category of risk their AI falls into and then take appropriate action to ensure compliance with the Act. For example, producers of high-risk AI will need to ensure that they adhere to the testing regimes, document retention requirements and accountability framework imposed under the AI Act.

If adopted, the AI Act will be directly applicable across the EU (i.e. excluding the UK) without the need for further implementation into Member State law. Obligations imposed on providers, importers, distributors and deployers of AI systems would apply 24 months after the regulation enters into force.

The AI Liability Directive – A summary

In conjunction with the AI Act, the EU intends to ensure that persons harmed by AI enjoy the same level of legal protection as those harmed by other technologies. On 28 September 2022, the European Commission delivered on this objective with the proposed AI Liability Directive.

The AI Liability Directive will apply to providers, operators and users of AI systems.

The purpose of the AI Liability Directive is to make claims easier for those who suffer harm as a result of AI. It will supplement the proposed amendments to the Product Liability Directive by laying down uniform rules for certain aspects of non-contractual civil liability for damage caused with the involvement of AI systems.

Key proposals in the AI Liability Directive include:

- Common rules for a non-contractual, fault-based liability regime for any harm caused by AI, particularly high-risk AI systems. This regime will work alongside the proposed Product Liability Directive, which provides for strict liability for physical harm or data loss.

- Provision for courts in EU member states to compel disclosure of evidence related to “high risk” AI systems from defendants (namely providers, operators or users of AI systems as defined in the AI Act) if certain conditions are met by the claimant concerned. These conditions are that the claimant:

(i) presents sufficient facts and evidence to support the claim for damages; and

(ii) shows that they have exhausted all proportionate attempts to gather the relevant evidence from the defendant.

- A rebuttable presumption of causation between the fault of a defendant’s AI system and the damage caused to a claimant in certain situations (i.e. this is not a strict liability regime). The claimant will need to show:

(i) That the defendant failed to comply with a duty of care which would protect against the damage caused;

(ii) That, based on the circumstances, it is reasonably likely that the fault of the defendant influenced the output or lack of output produced by the AI system; and

(iii) That the output (or failure to produce an output) of the AI system gave rise to the damage suffered by them.

- If the AI system concerned is ‘high risk’, a breach of the duty of care will be presumed if it is shown that the system or the defendant is not in compliance with the AI Act.

- An extraterritorial effect – the AI Liability Directive will apply to the providers and/or users of AI systems that are available on or operating within the EU market.

The effective date of implementation of the AI Liability Directive is currently unknown. The draft AI Liability Directive still needs to be considered by the European Parliament and the Council of the European Union. Unlike the AI Act, if adopted, it will need to be implemented by each Member State (excluding the UK) into its national law. This will take time, and we envisage that the AI Liability Directive will not be implemented for a number of years.

What about the UK?

The AI White Paper

Following Brexit, the UK is free to establish a regulatory approach to AI that is distinct from the EU and this is evident from its recent approach to AI regulation.

On 29 March 2023 the UK Government published a White Paper describing its approach to AI regulation as “pro-innovation”. The White Paper highlights that the UK has been at the forefront of AI progress and has a thriving research base, demonstrating its long-term commitment to invest in AI. The overall aim of the White Paper is, therefore, to strike a balance between ensuring that AI does not cause harm at a societal level and not limiting the development of AI through overly onerous regulation. These comments were echoed by Rishi Sunak on 26 October 2023, when he spoke ahead of hosting the UK’s AI summit on 1 and 2 November at Bletchley Park. He announced the creation of an AI safety body in the UK to evaluate and test new technologies, but flagged that this does not mean there should be a “rush to regulate the sector”, stating “...how can we write laws that make sense for something that we don’t yet fully understand…?”

As a result, in contrast to the EU’s decision to introduce new legislation to regulate AI, the UK Government is currently providing little guidance on how and on whom liability may rest in the future for harm caused by AI. Instead, it is focusing on setting expectations for the development and use of AI, to be applied by existing regulators such as the ICO and the FCA, in accordance with five key principles:

- Safety, security, robustness: AI systems should function in a robust, secure and safe way throughout the AI life cycle, and risks should be continually identified, assessed and managed. Regulators may need to introduce measures for regulated entities to ensure that AI systems are technically secure and function reliably as intended.

- Appropriate transparency and explainability: AI systems should be appropriately transparent and explainable. An appropriate level of transparency and explainability will mean that regulators have sufficient information about AI systems and their associated inputs and outputs to give meaningful effect to the other principles (for example, to identify accountability). An appropriate degree of transparency and explainability should be proportionate to the risk(s) presented by an AI system. The White Paper notes that regulators may need to find ways to support and encourage relevant life cycle actors to implement appropriate transparency measures through regulatory guidance.

- Fairness: AI systems should not undermine the legal rights of individuals and organisations, discriminate unfairly or create unfair market outcomes. The White Paper notes that regulators may need to develop and publish descriptions and illustrations of fairness that apply to AI systems within their domains.

- Accountability and governance: Governance measures should be in place to ensure effective oversight of the supply and use of AI systems, with clear lines of accountability established across the AI life cycle. Regulators will need to look for ways to ensure that clear expectations for regulatory compliance and good practice are placed on actors in the AI supply chain.

- Contestability and redress: Where appropriate, impacted third parties and actors in the AI life cycle should be able to contest an AI decision or outcome that is harmful or creates a material risk. Regulators will be expected to clarify existing routes to contestability and redress and implement proportionate measures to ensure that the outcomes of AI use are contestable where appropriate.

Importantly, the UK Government accepts that the White Paper and proposals are deliberately designed to be flexible – “As the technology evolves, our regulatory approach may also need to adjust”.

What next for businesses?

The potential benefits of AI are considerable. However, AI can present additional risk to manufacturers, and this has been highlighted by the proposed changes to the liability framework for AI in the EU.

The EU and UK do not currently have a liability framework specifically applicable to harm or loss resulting from the use of AI and, as there are many parties involved in an AI system, determining who should be liable can be problematic. This will be a significant area for future litigation, and the AI Safety Summit on 1 and 2 November 2023 may provide valuable insight into how world leaders will balance mitigating the risks of AI (via legislation or otherwise) against the competing need to encourage development of AI systems.

As a result, businesses (and their insurers) should be proactive in considering:

- How liability may result from a product’s design and / or manufacture - for example a defect in manufacture that causes the product to fail and cause harm/injury.

- Whether the use of AI has increased the risk of liability when compared with the inherent risks in design and manufacturing processes – a risk benefit analysis.

- How AI technologies are being used within their organisation, including how decisions are made and what implications there may be for individuals, and how this is documented.

- The range of potential misuse of the AI by clients, end users and third parties. Businesses should consider whether there are any practical steps that can be taken to guard against any such misuse, for example by providing user manuals highlighting a narrow 'correct' use and the dangers of misuse and reliance on poor data.

Changes are afoot in Europe, with the proposed AI Act, amendments to the Product Liability Directive and the proposed AI Liability Directive in the EU, and the White Paper on AI in the UK. The ramifications of the differing EU proposals are yet to be seen. However, if the legislation is adopted following negotiation, its impact on developers and businesses in the product liability setting when importing products into the EU will be significant.

The product liability landscape of the future will be different from the past as new technology and software come to the forefront of the collective legislative mind. Litigation in this area will naturally be complex given the interplay of many different actors. Should your business welcome an early discussion on the liability issues which are likely to arise, please do not hesitate to contact the authors.
